Quality Estimation for Machine Translation

07/09/2020

Quality estimation for machine translation is an active field of research in the NLP community. As a method that does not require access to reference translations, it may very well become a standard evaluation tool for translation and language data providers in the future.

Author

NLP Research Analyst at TAUS with a background in linguistics and natural language processing. My mission is to follow the latest trends in NLP and use them to enrich the TAUS data toolkit.

Related Articles

by David Koot

09/01/2026

TAUS EPIC API's customizable Quality Estimation models can enhance translation workflows and meet specific needs without requiring in-house NLP expertise.

05/12/2025

Explore how TAUS EPIC API's Quality Estimation can revolutionize translation workflows, offering scalable, domain-specific solutions for Language Service Providers without the need for in-house NLP experts.

30/10/2025

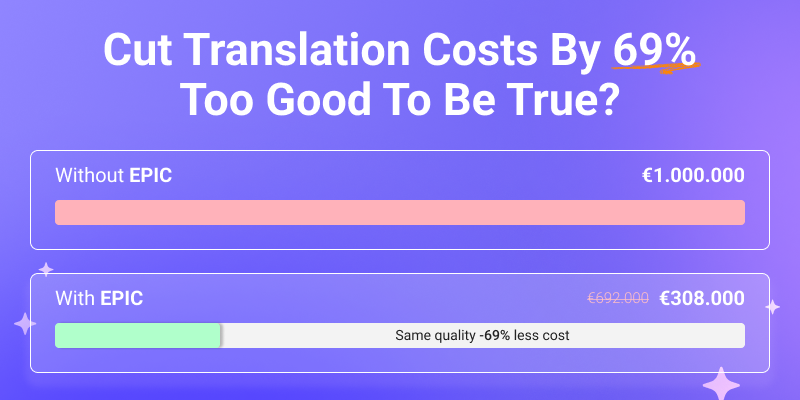

See your translation ROI with Quality Estimation (QE) and Automatic Post-Editing (APE). Find out how EPIC can reduce post-editing costs by up to 70% while improving efficiency.