Language Data in an Idea Pot: Notes from the TAUS Data Summit 2018

What are the trends and issues that data experts discuss today? What are the translation data challenges? Find it all in the reflections from the TAUS Data Summit 2018.

The TAUS Data Summit 2018, hosted by Amazon in Seattle, brought owners and producers of language data together with 'power users' and MT developers to learn from each other and to find common ground and ways to collaborate. This blog aims to give you an impression of the highlights and hot topics from this event.

Language data is a vital tool for driving technology forward. It can be used in many ways: for leveraging translations, improving MT performance, powering voice assistants, and enabling many natural language processing (NLP) and machine learning (ML) applications we cannot yet even envision. In current practice, bilingual language data comes either from translation memories (TMs), which hold high-quality data produced during the translation process, or from the web, where data of varying formats and quality can be crawled. As an alternative, the TAUS Data Cloud, an industry-shared data repository built on a reciprocal model and holding more than 30 billion words in 2,200 languages, was introduced for the use case of training and customizing MT engines. However, it turned out that a shared, reciprocal model mainly creates a one-sided market of translation buyers. When it comes to data, buyers are in abundance while providers are hard to find.

Why do people NOT share data and/or TMs?

The following potential reasons were offered by the attendees:

- There are concerns over ownership and intellectual property.

- It is cumbersome, time-consuming and takes extra effort.

- There are concerns over the quality of data.

- There is not enough data available in the desired domains and language pairs.

TAUS Director, Jaap van der Meer, made a few suggestions in his presentation to help clarify copyright on translation data. We should:

- Acknowledge the differences in copyright law between North America and Europe: "fair use" in North America versus "sui generis rights" on databases in Europe.

- Distinguish between source and target.

- Introduce a new legal definition for “Translation Data”.

He then introduced a Data Market Model, as an alternative to an industry-shared model, to address the difficulties around data sharing and copyright.

Based on these ideas, TAUS defined its Data Roadmap with the following features:

- Data Market: buy, sell and bid on translation data.

- Data Matching: discover data most similar to your project.

- Premium API: an API that lets MT developers and power users provide Data Cloud services to their customers from within their own platforms.

- Feedback and tracking: number of sample views and usage.

- Speech Data: the roadmap is to be expanded to cover speech data.

Scenario-based Applications of Cross-language Big Data

With the new wave of neural technology, data is also making many other devices and apps more intelligent and ambient. Chatbots for customer support, self-driving cars, smart home devices, you name it: none of them will work unless we feed them data from real life and real people, data that captures how we speak and interact. The business session of the Summit, which explored the business opportunities around data, opened with a use case presentation by Allen Yan (GTCOM). Allen traced GTCOM's evolution from a multilingual call center in 2013 to using Big Data + AI for scientific research in 2018.

Part of the new concept of "cross-language big data" is the ability to analyze data with multilingual algorithms. GTCOM's NLP algorithm platform supports 10 languages and provides 51 external algorithm services, which is impressive compared to the Baidu and Alibaba platforms, which both support only Chinese and offer fewer external algorithm services. Allen suggested that today we are facing the combination of MT + big data + AI, with the goal of building man-machine coordination for language services. The debate over whether machines will replace humans continues.

“From our perspective, the future lies in man and machine coordination,” Allen added. To illustrate this, he presented a video subtitling demo in which MT does the translation and a human fixes it within the interface. He then showed the LanguageBox in action: a conference interpretation device that processes speech data in real time and provides simultaneous spoken translation into another language. The demos from Chinese into English and into Dutch were impressive and prompted questions from the audience about the device's back-end setup. Allen responded: “We use SMT and NMT combined. We clean the data and use it in training of the MT. We invest 45 million USD annually in improving the domain-specific MTs.”

Data Pipeline: 3 Challenges

Drawing on experiences with his own baby daughter, Emre Akkas from Globalme explained that the way humans learn is very similar to how machines learn. Babies, just like machines, collect data every day: they capture data points and classify them so they can use them in practice later. Computer scientists use the same method to teach computers to perform certain tasks.

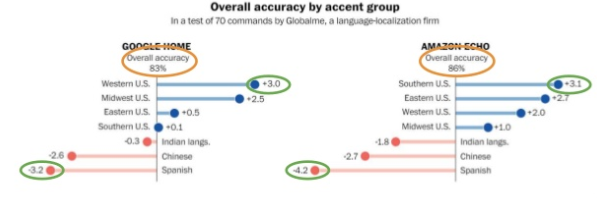

Approximately how many hours of speech data do you need to train a basic ASR engine? The more the better, of course, but around 300 hours would be a decent amount. More important than the amount of data, though, is managing the data so that the various data points can be connected. According to Emre, the hardest part of this equation is data bias. We all understand that people raised in different regions with different levels of ethnic diversity behave differently. Well, the same applies to machines.

This visual shared by Emre clearly shows that the accuracy rates measured for Google Home and Amazon Echo vary across accent groups, which relate directly to ethnic background. Providing plenty of food for thought, the presentation ended with a difficult question: what if, as a result, we create and train machines that are more racist than humans?

Idea Pot from the Brainstorming Session

With the stage set for the different themes, the data experts in the room split into smaller groups to explore them and come up with answers, or even more questions. Here are some of the takeaways and solutions from the brainstorming session.

- Capturing a lot of metadata and context should become a natural part of the data business.

- How do you attach value to data? The idea for an industry-shared marketplace is to set a base price and let people bid. Market dynamics should then define the price.

- Acquire intelligence from the content lifecycle: using MT to translate a webpage and later deciding whether to improve it based on website traffic was suggested as a best practice for managing available resources effectively.

- Data must be anonymized to remove data owners' concerns about brand names appearing in the language data. Making sure the data is anonymous is also vital for GDPR compliance. The Data Marketplace will need an automatic anonymization service, but the initial responsibility lies with the data uploader.

- There need to be guidelines and rules on data anonymization and privacy. TAUS was asked to help compile them.

- What would motivate you to sell your data? Speaking as a potential seller, an LSP representative said that it is hard to get access to useful machine translation. “As an LSP, we’d be willing to provide our human translated output to improve the quality of the engine if we were able to use the results of the trained engine,” said the participant.

- Market research could help identify more profiles of people who would be willing to sell data.

- The biggest challenges in collecting data are the lack of an immediate ROI on data and agreeing on how to use, and how to choose among, the various big data organizations.

- Making sure that the MT engine is trained with the latest terminology is an issue that requires an automated solution.

- There are all kinds of niche cases (such as voice recognition data), and the data available in the industry doesn’t really support those niches.

- Do end users appreciate improved quality? Research suggests that most end users are happy with good-enough quality and care less about further quality improvements.

- Quality evaluation of data and putting the human in the loop could be as costly as translating from scratch. Finding the right balance here is important.

- The data you encounter on the web lacks diversity: it is mostly travel-domain and junk content, and valuable items are very limited.

- To align data better, we have to be aware of semantic clues about the language and dialect (such as Arabic numerals).

- Random sampling can be used as a method of cleaning data.
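One way to read the random-sampling suggestion: rather than reviewing an entire corpus, draw a reproducible random sample of segment pairs, flag the bad ones, and use the flagged share to estimate the corpus error rate and decide whether it needs cleaning. The Python sketch below assumes a corpus held as a list of (source, target) pairs; `is_bad` is a hypothetical automatic check standing in for human review, and the 5% threshold is an arbitrary illustrative value, not something proposed at the Summit.

```python
import random
from typing import Callable, List, Tuple

Segment = Tuple[str, str]  # (source, target) pair


def sample_segments(corpus: List[Segment], k: int, seed: int = 42) -> List[Segment]:
    """Draw up to k segment pairs uniformly at random, without replacement."""
    rng = random.Random(seed)  # fixed seed so reviewers see a reproducible sample
    return rng.sample(corpus, min(k, len(corpus)))


def estimate_error_rate(sample: List[Segment], is_bad: Callable[[Segment], bool]) -> float:
    """Share of sampled pairs flagged as bad; 0.0 for an empty sample."""
    if not sample:
        return 0.0
    return sum(1 for seg in sample if is_bad(seg)) / len(sample)


def is_bad(seg: Segment) -> bool:
    """Hypothetical check: flag empty targets or targets identical to the source."""
    source, target = seg
    return not target.strip() or source.strip() == target.strip()


if __name__ == "__main__":
    toy_corpus = [
        ("Hello", "Hallo"),
        ("Thank you", "Dank je"),
        ("Good morning", ""),      # empty target
        ("Goodbye", "Goodbye"),    # untranslated
    ] * 25  # 100 toy segments, half of them problematic
    sample = sample_segments(toy_corpus, k=20)
    rate = estimate_error_rate(sample, is_bad)
    print(f"estimated error rate: {rate:.0%}")
    if rate > 0.05:  # illustrative threshold only
        print("corpus needs cleaning before use")
```

Sampling only gives an estimate, so in practice the sample size would be chosen to match how precise that estimate needs to be.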

And the following questions and concerns were raised:

- How do we ensure that data uploaded for research purposes is not used commercially?

- Trust is still an issue: establishing trust between the parties in a data trade, and clarifying for both sides how the data will be used, is crucial, but how to achieve this has not yet been solved.

- Emojis are emerging as a growing area for data collection. How do we deal with them?

- Most shared language data comes from TMs, which are often segmented. So how can we preserve the relevant context?

- How do you justify investing in data purchases when you don’t know how useful the whole dataset will be in your particular case? For post-edited MT output, edit distance can be a meaningful indicator of whether the data is valuable. But when you only use raw MT output, how will you know the real return on that investment?

- An ML-driven scoring model is needed to evaluate the value of the data.

- Efforts to manufacture data have been made, but not much has been achieved. For voice data, an artificial environment can be created to manufacture data, but for text, creating an artificial environment doesn’t seem to be a viable option.

- When you gather publicly available data and resell it, could that be seen as data sharing?
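To make the edit-distance point concrete: the amount of post-editing a translator did on MT output can be approximated by a word-level Levenshtein distance between the raw MT output and the post-edited version, normalized by the length of the post-edited text. This is a simplified, TER-like score chosen for illustration, not a metric endorsed at the Summit; the function names and example sentences are hypothetical.

```python
from typing import List


def levenshtein(a: List[str], b: List[str]) -> int:
    """Minimum number of token insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, tok_a in enumerate(a, start=1):
        curr = [i] + [0] * len(b)
        for j, tok_b in enumerate(b, start=1):
            cost = 0 if tok_a == tok_b else 1
            curr[j] = min(prev[j] + 1,          # delete tok_a
                          curr[j - 1] + 1,      # insert tok_b
                          prev[j - 1] + cost)   # substitute, or keep a match
        prev = curr
    return prev[-1]


def post_edit_ratio(mt_output: str, post_edited: str) -> float:
    """Word-level edit distance divided by post-edited length (0.0 = MT left untouched)."""
    mt_tokens, pe_tokens = mt_output.split(), post_edited.split()
    if not pe_tokens:
        # Everything was deleted: 1.0 if there was MT output, else nothing changed.
        return float(bool(mt_tokens))
    return levenshtein(mt_tokens, pe_tokens) / len(pe_tokens)


if __name__ == "__main__":
    mt = "the cat sit on mat"
    pe = "the cat sat on the mat"
    # One substitution (sit -> sat) plus one insertion (the) over 6 words.
    print(f"{post_edit_ratio(mt, pe):.2f}")  # prints 0.33
```

A low ratio suggests the MT output was already close to usable, while a high one (it can exceed 1.0 for heavy rewrites) points to substantial rework. As the question above notes, this signal only exists when post-edited data is available; raw MT output offers no such comparison.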

Şölen is the Head of Digital Marketing at TAUS, where she leads digital growth strategies focused on generating compelling results through search engine optimization, effective inbound content and social media. She has over seven years of experience in related fields. She holds BAs in Translation Studies and Brand Communication from Istanbul University in addition to an MA in European Studies: Identity and Integration from the University of Amsterdam. After gaining experience as a transcreator for marketing content, she worked in business development for a mobile app and in content marketing before joining TAUS in 2017. She believes in keeping up with modern digital trends and the power of engaging content. She also writes regularly for the TAUS Blog/Reports and manages several social media accounts she created on topics of personal interest, with over 100K followers.

by Şölen Aslan